Beyond Automation, Toward Symbiosis: Interactions Between Humans and Artificial Intelligence

Introduction: Human-AI Interaction

We are now living in an era where artificial intelligence (AI) is no longer a distant concept confined to research laboratories or science fiction. Instead, it has become a reality intertwined with our daily lives (albeit, at times, not without issues¹). We interact with AI systems all the time, often without even realizing it: from voice assistants that respond to our requests to streaming platforms that recommend which TV series to watch when we’re bored on the couch. And here lies the first warning sign: AI is no longer just an observer learning from our behaviors; it is starting to influence them.

These interactions between humans and AI go beyond merely using technology; they touch deeply on aspects of our identity, our relationships, and the decisions we make every day. These connections can provide help and support but also bring various risks and challenges, such as the loss of skills, commonly referred to in research as “deskilling.”² The growing presence of AI in society raises complex questions: How do we want to relate to it? What role do we want to assign it in our lives? And how ready are we to trust decisions that, in some sense, are not entirely our own?

In this article, we will briefly explore these questions, aiming to prompt readers to reflect on what it might mean to interact with AI.

Types of Interaction with Artificial Intelligence

AI has become an invisible companion in our daily activities. It powers services we use every day across a wide range of sectors, from entertainment to healthcare, and we reach it through different modes of interaction.

Undoubtedly, the most successful mode of interaction with AI today is the conversational agent: chatbots and voice assistants such as the more recent ChatGPT or the more familiar Siri and Alexa. The same mode of interaction is used by the “assistants” found on the websites of telecom providers and other companies, which offer first-line support to their customers.

To better understand the different modes of interaction between humans and AI, it is useful to consider some theoretical models that analyze levels of automation. These models provide an interesting perspective on how technology can automate various tasks while varying the degree of human involvement and control.

One of the earliest models of automation, and also one of the most frequently cited, defines a one-dimensional scale of task automation levels³. This model, based on 10 levels, has two extremes: total human control (a level where the machine provides no support or automation for the specific task) and total automation (a level where the human has no role in performing the task, not even as a supervisor).

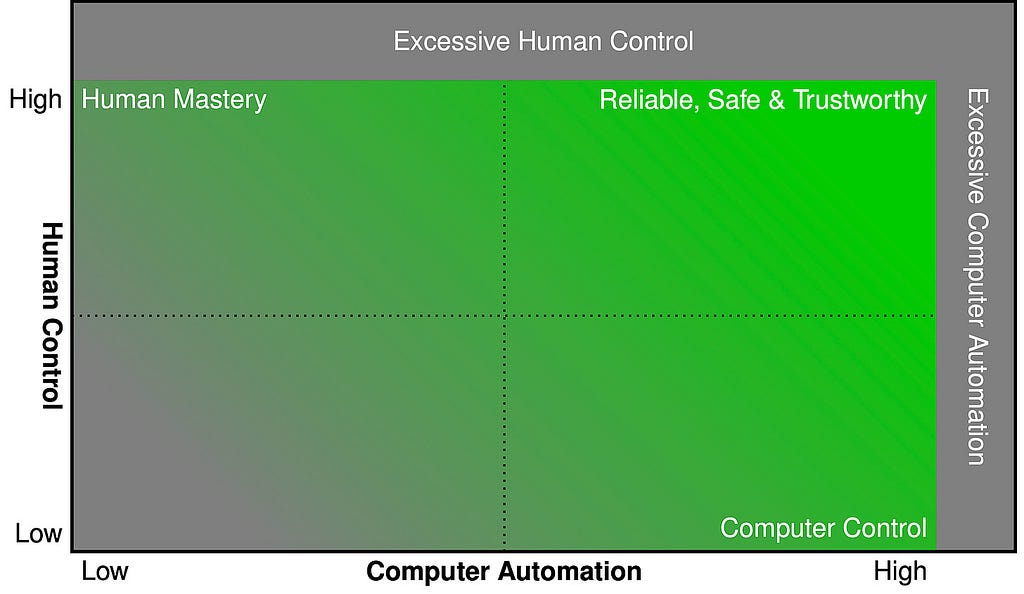

A more recent model, on the other hand, separates the degree of automation from the level of human control, offering a two-dimensional view of the relationship between humans and AI (or, more generally, machines)⁴. This approach allows for the identification of four types of systems:

- Non-automated, with high human control: systems based on the user’s skill (e.g., a piano).

- Fully automated, with minimal human control: entirely managed by the machine (e.g., car airbags).

- Low automation, with low human control: for example, a watch.

- Highly automated, with high human control: for example, an automatic camera that allows the user to adjust its settings.

According to this model, this last category represents the ideal for AI systems, as it is the combination most likely to deliver reliability, safety, and trust.
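The four categories above can be sketched in a few lines of Python. This is only an illustration of the idea that automation and human control are two independent dimensions: the 0-to-1 scores, the 0.5 threshold, and the names `System` and `quadrant` are assumptions made for this example and do not come from the cited model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    automation: float     # hypothetical score: 0.0 = none, 1.0 = full
    human_control: float  # hypothetical score: 0.0 = none, 1.0 = full


def quadrant(system: System, threshold: float = 0.5) -> str:
    """Place a system in one of the four categories of the two-dimensional view.

    The threshold is an arbitrary cut-off chosen for illustration.
    """
    high_automation = system.automation >= threshold
    high_control = system.human_control >= threshold
    if high_automation and high_control:
        return "highly automated, high human control"
    if high_automation:
        return "fully automated, minimal human control"
    if high_control:
        return "non-automated, high human control"
    return "low automation, low human control"


# Illustrative scores for the examples mentioned in the text (not measured values).
EXAMPLES = [
    System("piano", automation=0.0, human_control=1.0),
    System("car airbag", automation=1.0, human_control=0.0),
    System("watch", automation=0.1, human_control=0.1),
    System("adjustable automatic camera", automation=0.9, human_control=0.9),
]

if __name__ == "__main__":
    for example in EXAMPLES:
        print(f"{example.name}: {quadrant(example)}")
```

Note that each system carries two separate scores. On a one-dimensional scale, more automation would necessarily mean less human control, and the last category could not be expressed at all.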

It is worth noting, however, that systems can sometimes be overly controlled by the user or excessively automated. In the latter case, AI-based systems can lead users to rely too heavily on them, potentially resulting in accidents, even fatal ones⁵.

The Approach Recommended by Research

In this context, several researchers have sought to validate the theories presented in the two-dimensional model of human-AI interaction. A particularly intriguing question arises: is automation truly the best way to leverage AI?

To explore this, Italian researchers (including the author of this note) investigated different ways of designing AI for a specific application: evaluating the usability (a quality dimension) of web pages⁶. The results are thought-provoking: the analysis showed that a fully automated approach was highly effective at identifying webpage issues, especially the most severe ones. This highlights how efficient and accurate AI can be when applied to specific tasks.

However, the most intriguing insight emerged when exploring alternative approaches aimed at enhancing human capabilities rather than replacing them through task automation. By combining the refined judgment of human experts with the analytical capabilities of AI, researchers discovered a “synergy” that significantly improves the detection of lower-severity issues. This hybrid approach leverages the strengths of both humans and machines, enabling the identification of subtle problems that would otherwise elude an analysis performed by automation alone or by humans alone.

This finding suggests that, while automation excels at identifying more obvious problems, integrating human expertise with AI can create a more comprehensive evaluation process. Thus, the choice of the right way to interact with AI is closely tied to the specific needs and tasks of end users — that is, us!

A Possible Future: A Symbiosis Between Humans and AI

AI is becoming increasingly present in our lives, promising numerous improvements but also exposing us to various risks. Throughout this article, we explored different levels of interaction between humans and AI, highlighting how the integration of human expertise and AI capabilities enables more comprehensive and precise outcomes, even capturing subtle details. The discovery of the potential of this “symbiosis” between humans and AI points to a promising model for the future: not automation that replaces humans, but AI that collaborates with us, amplifying our potential.

This “symbiotic” relationship between humans and AI goes beyond mere efficiency. It represents a model that combines human sensitivity and awareness with the analytical power of machines. AI can reliably handle the more conspicuous and repetitive problems, but it is often humans, with their intuition and judgment, who perceive nuances and complexities that an automated system cannot grasp on its own. This balance between autonomy and assistance gives us the opportunity to design a future in which AI and humanity collaborate to achieve results that neither could accomplish alone. In conclusion, the true value of AI will emerge not just from its technical capabilities, but from the quality of the relationship we build with it.

This post originally appeared (in Italian) in MagIA. Read the original post here.

1. For example, one can refer to the various cases of accidents caused by autonomous vehicles: https://www.theguardian.com/technology/2024/apr/26/tesla-autopilot-fatal-crash

2. Sambasivan, Nithya, and Rajesh Veeraraghavan. “The Deskilling of Domain Expertise in AI Development.” In CHI Conference on Human Factors in Computing Systems, 1–14. New Orleans, LA, USA: ACM, 2022. https://doi.org/10.1145/3491102.3517578.

3. Parasuraman, R., T.B. Sheridan, and C.D. Wickens. “A Model for Types and Levels of Human Interaction with Automation.” IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans 30, no. 3 (2000): 286–97. https://doi.org/10.1109/3468.844354.

4. Shneiderman, Ben. “Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy.” International Journal of Human–Computer Interaction, March 23, 2020. https://doi.org/10.1080/10447318.2020.1741118.

5. See the various cases of accidents caused by autonomous vehicles mentioned earlier: https://www.theguardian.com/technology/2024/apr/26/tesla-autopilot-fatal-crash

6. Esposito, Andrea, Giuseppe Desolda, and Rosa Lanzilotti. “The Fine Line between Automation and Augmentation in Website Usability Evaluation.” Scientific Reports 14, no. 1 (May 2, 2024): 10129. https://doi.org/10.1038/s41598-024-59616-0.